Privacy-preserving machine learning with tensor networks

1Group of Applied Physics, University of Geneva, 1211 Geneva 4, Switzerland

2Constructor Institute, 8200 Schaffhausen, Switzerland

3Instituto de Ciencias Matemáticas (CSIC-UAM-UC3M-UCM), 28049 Madrid, Spain

4Departamento de Análisis Matemático, Universidad Complutense de Madrid, 28040 Madrid, Spain

5Departamento de Matemática Aplicada a la Ingeniería Industrial, Universidad Politécnica de Madrid, 28006 Madrid, Spain

6Departamento de Álgebra, Geometría y Topología, Universidad Complutense de Madrid, 28040 Madrid, Spain

7Escuela Técnica Superior de Ingeniería de Sistemas Informáticos, Universidad Politécnica de Madrid, 28031 Madrid, Spain

| Published: | 2024-07-25, volume 8, page 1425 |

| Eprint: | arXiv:2202.12319v3 |

| Doi: | https://doi.org/10.22331/q-2024-07-25-1425 |

| Citation: | Quantum 8, 1425 (2024). |


Abstract

Tensor networks, widely used for providing efficient representations of low-energy states of local quantum many-body systems, have recently been proposed as machine learning architectures that could offer advantages over traditional ones. In this work we show that tensor-network architectures have especially promising properties for privacy-preserving machine learning, which is important in tasks such as the processing of medical records. First, we describe a new privacy vulnerability that is present in feedforward neural networks, illustrating it in synthetic and real-world datasets. Then, we develop well-defined conditions to guarantee robustness to this vulnerability, which involve the characterization of models equivalent under gauge symmetry. We rigorously prove that such conditions are satisfied by tensor-network architectures. In doing so, we define a novel canonical form for matrix product states, which has a high degree of regularity and fixes the residual gauge that is left in the canonical forms based on singular value decompositions. We supplement the analytical findings with practical examples where matrix product states are trained on datasets of medical records, which show large reductions in the probability of an attacker extracting information about the training dataset from the model's parameters. Given the growing expertise in training tensor-network architectures, these results imply that one may not be forced to choose between accuracy in prediction and ensuring the privacy of the information processed.
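The gauge symmetry mentioned in the abstract can be illustrated with a small toy example. The sketch below is not the authors' code: it assumes a three-site matrix product state with open boundaries, bond dimension 2, and a hand-picked invertible matrix `g`, and all function names (`contract`, `apply_gauge`, and so on) are hypothetical. It shows that inserting `g` and its inverse on a bond changes the stored parameters while leaving every entry of the represented tensor unchanged, which is why gauge-equivalent models compute the same function even though their parameters differ.

```python
import itertools
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def random_matrix(rows, cols, rng):
    """Matrix with entries drawn uniformly from [-1, 1]."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def contract(mps, indices):
    """Evaluate one entry of the full tensor: the product of the matrices
    selected by the physical indices (open boundary conditions)."""
    result = mps[0][indices[0]]
    for site, idx in zip(mps[1:], indices[1:]):
        result = matmul(result, site[idx])
    return result[0][0]


def apply_gauge(mps, bond, g, g_inv):
    """Insert g @ g_inv on the bond between sites `bond` and `bond + 1`."""
    new = [list(site) for site in mps]
    new[bond] = [matmul(m, g) for m in mps[bond]]
    new[bond + 1] = [matmul(g_inv, m) for m in mps[bond + 1]]
    return new


rng = random.Random(0)
phys_dim, bond_dim = 2, 2  # illustrative sizes, not taken from the paper

# Three-site MPS: one matrix per physical index at each site,
# with shapes (1 x D), (D x D), (D x 1).
mps = [
    [random_matrix(1, bond_dim, rng) for _ in range(phys_dim)],
    [random_matrix(bond_dim, bond_dim, rng) for _ in range(phys_dim)],
    [random_matrix(bond_dim, 1, rng) for _ in range(phys_dim)],
]

# An arbitrary invertible 2x2 matrix (determinant 1) and its exact inverse.
g = [[2.0, 1.0], [1.0, 1.0]]
g_inv = [[1.0, -1.0], [-1.0, 2.0]]

gauged = apply_gauge(mps, 0, g, g_inv)

# Largest entrywise difference between the two parameter sets.
max_param_change = max(
    abs(x - y)
    for site_a, site_b in zip(mps, gauged)
    for mat_a, mat_b in zip(site_a, site_b)
    for row_a, row_b in zip(mat_a, mat_b)
    for x, y in zip(row_a, row_b)
)

# Largest difference between the tensors the two parameter sets represent.
max_output_change = max(
    abs(contract(mps, idx) - contract(gauged, idx))
    for idx in itertools.product(range(phys_dim), repeat=len(mps))
)

print(f"largest parameter change: {max_param_change:.3f}")
print(f"largest output change:    {max_output_change:.2e}")
```

The two parameter sets differ visibly, yet the output difference stays at the level of floating-point rounding. Choosing a fixed representative among all such equivalent parameter sets is the role a canonical form plays in the abstract's argument.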

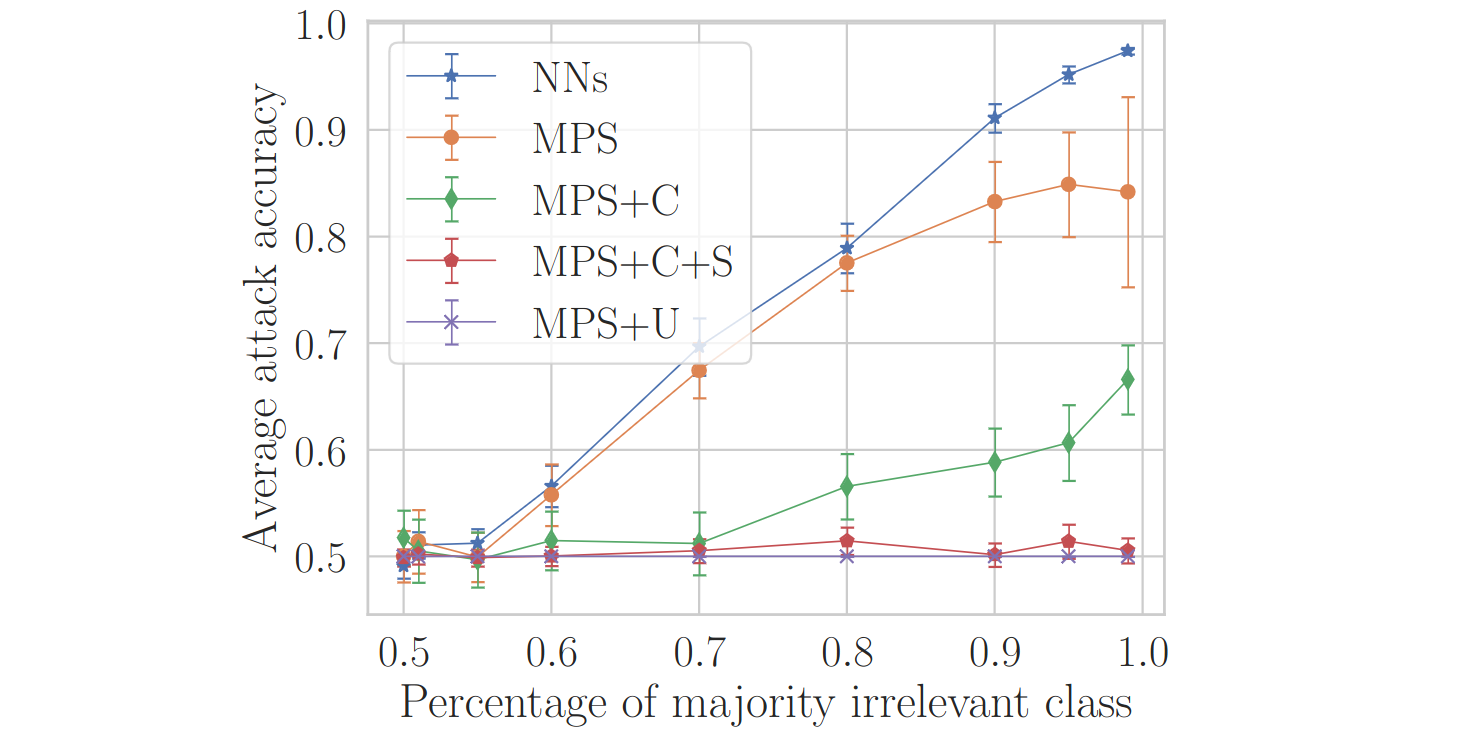

Featured image: Accuracy of attacks attempting to predict the majority value of a feature of the training data that is irrelevant for the task in which the models have been trained, for neural networks (NNs) and tensor networks (MPS) subject to different strategies for fixing the gauge. Importantly, the tensor networks that have not been protected by choosing a representative of all gauge-equivalent models are vulnerable in a similar way to the neural networks. Moreover, it is known that the standard way of choosing a gauge representative does not completely fix the gauge, and we see that in this case (the green curve denoted by MPS+C) some information can be extracted. This information is erased when randomizing over the residual freedom (the red curve denoted by MPS+C+S), or by using a new way of fixing the gauge that we develop in the work (the purple curve denoted by MPS+U).

Presentation “Privacy-preserving machine learning with tensor networks” by Alejandro Pozas Kerstjens at PIRSA Perimeter Institute.

Cited by

[1] José Ramón Pareja Monturiol, David Pérez-García, and Alejandro Pozas-Kerstjens, "TensorKrowch: Smooth integration of tensor networks in machine learning", Quantum 8, 1364 (2024).

[2] Elena Peña Tapia, Giannicola Scarpa, and Alejandro Pozas-Kerstjens, "A didactic approach to quantum machine learning with a single qubit", Physica Scripta 98 5, 054001 (2023).

[3] Javier Lopez-Piqueres, Jing Chen, and Alejandro Perdomo-Ortiz, "Symmetric tensor networks for generative modeling and constrained combinatorial optimization", Machine Learning: Science and Technology 4 3, 035009 (2023).

[4] Arturo Acuaviva, Visu Makam, Harold Nieuwboer, David Pérez-García, Friedrich Sittner, Michael Walter, and Freek Witteveen, "The minimal canonical form of a tensor network", arXiv:2209.14358, (2022).

[5] Matthias Christandl, Vladimir Lysikov, Vincent Steffan, Albert H. Werner, and Freek Witteveen, "The resource theory of tensor networks", arXiv:2307.07394, (2023).

The above citations are from SAO/NASA ADS (last updated successfully 2024-09-01 02:31:19). The list may be incomplete as not all publishers provide suitable and complete citation data.

This Paper is published in Quantum under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. Copyright remains with the original copyright holders such as the authors or their institutions.